Prompt Optimization is now generally available

Today we are releasing a new SOTA prompt optimization algorithm with significant accuracy and efficiency gains, generally available now through our API. Prompt Optimization is an agentic prompt rewriting system that automatically optimizes and adapts prompts across models, significantly enhancing accuracy and outperforming days of manual prompt engineering in minutes of background processing.

As foundation models continue to proliferate, organizations need the ability to evaluate new models, adopt open-source alternatives, and shift workloads across providers based on accuracy, latency, cost, or regulatory requirements. In practice, many AI applications become tightly coupled to the first model they are built on because prompts don't transfer across models.

The prompt layer is where lock-in actually happens

Prompts tuned for one model often fail to transfer cleanly to another. Migrating typically requires weeks of manual rewrites and testing. This creates a powerful form of vendor lock-in that is operational rather than contractual.

One of the most acute examples is model upgrades in production. Teams switch to a newer model expecting a lift, and instead see accuracy regressions and new edge cases. When you’re operating under high accuracy requirements, fixing that manually means days of prompt iteration and eval runs.

On the other end of the spectrum are model deprecations, which force rushed migrations under tight timelines and pull engineers off roadmap work.

Leading in AI requires being prepared for nondeterministic updates to the core dependencies of our applications on a monthly basis. This is a completely different paradigm from traditional software engineering, and once teams scale past one or two applications, managing this manually becomes all but impossible. Prompt Optimization turns this combinatorial complexity into an enormous edge for frontier teams.

State-of-the-art results across benchmarks

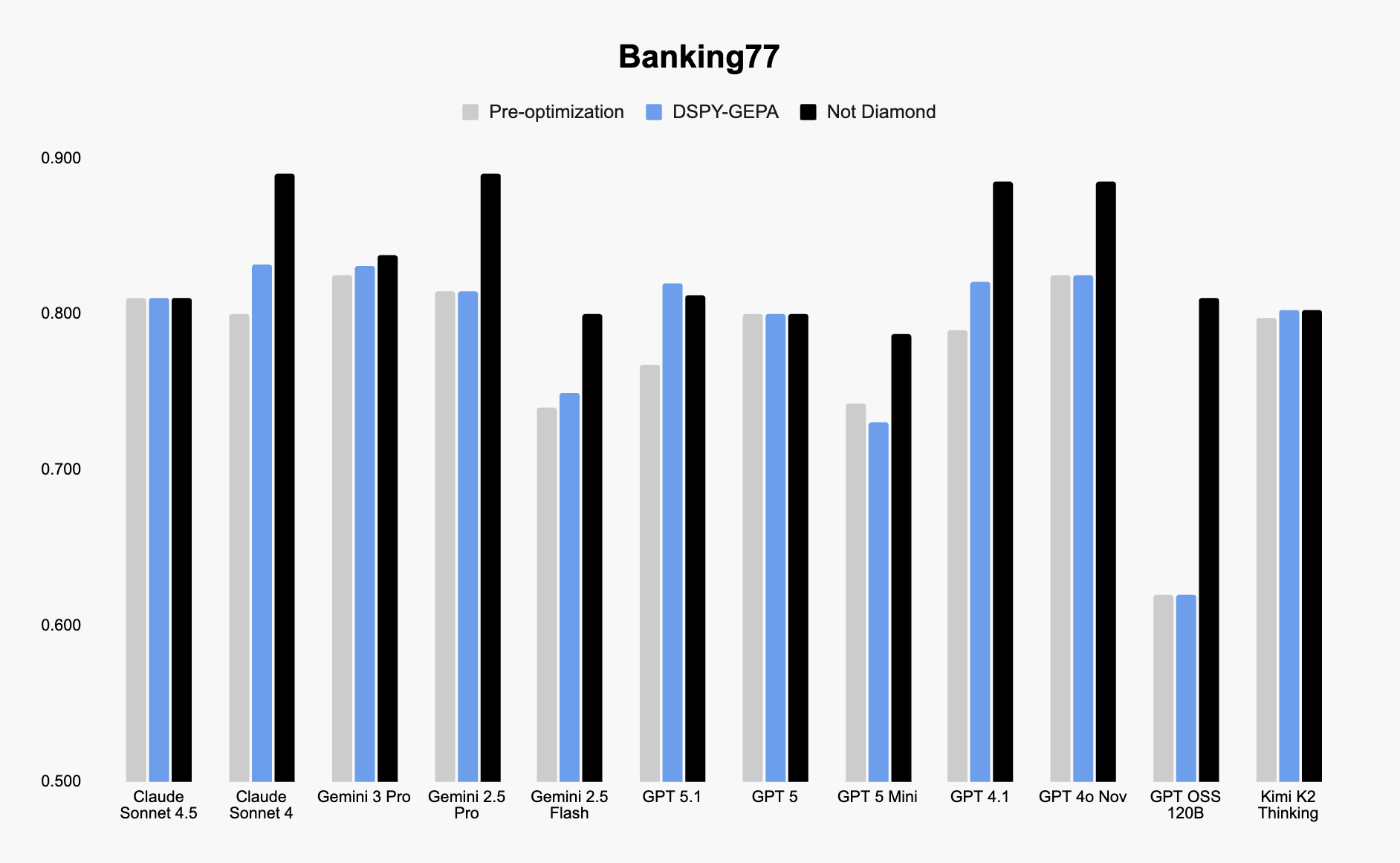

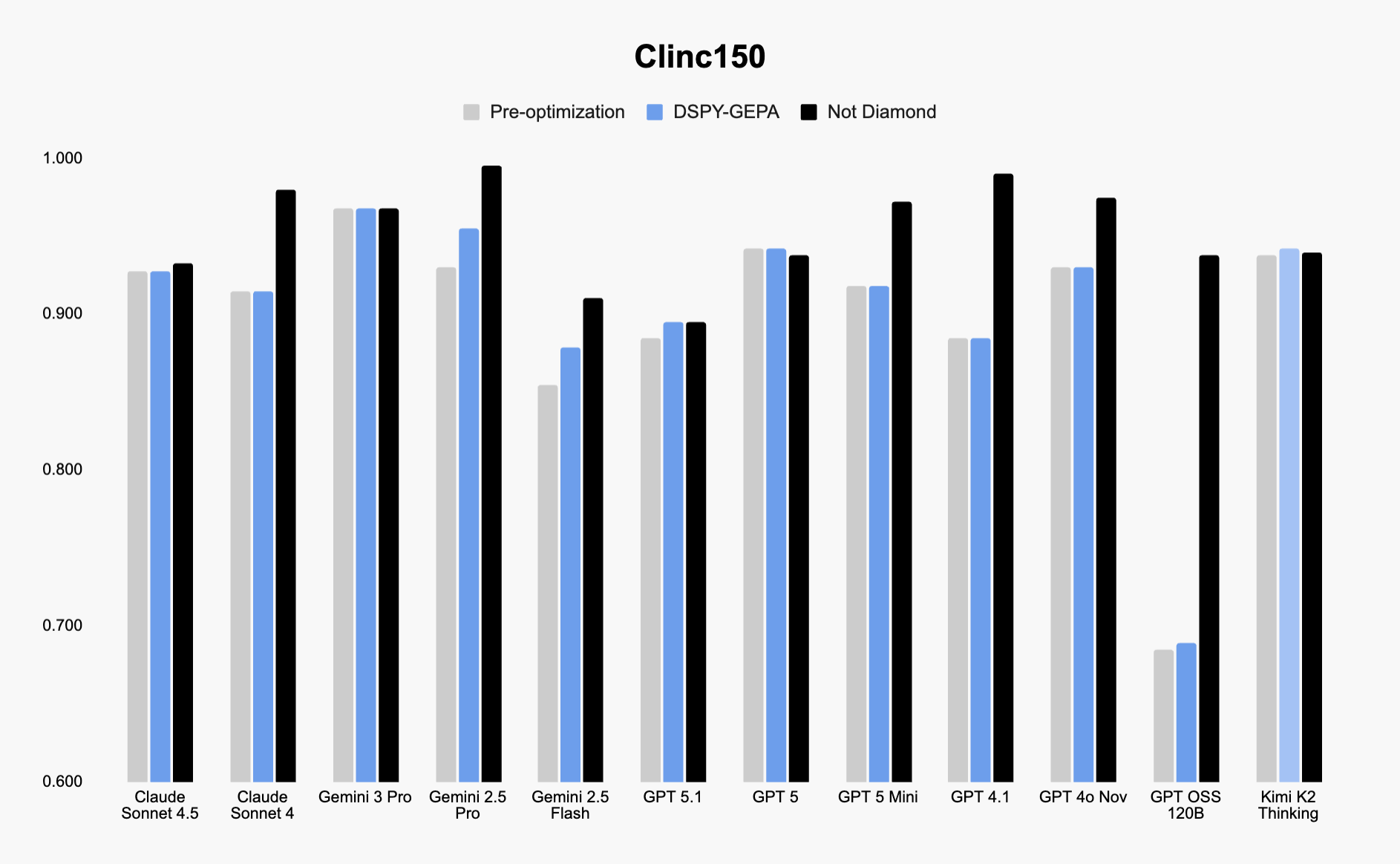

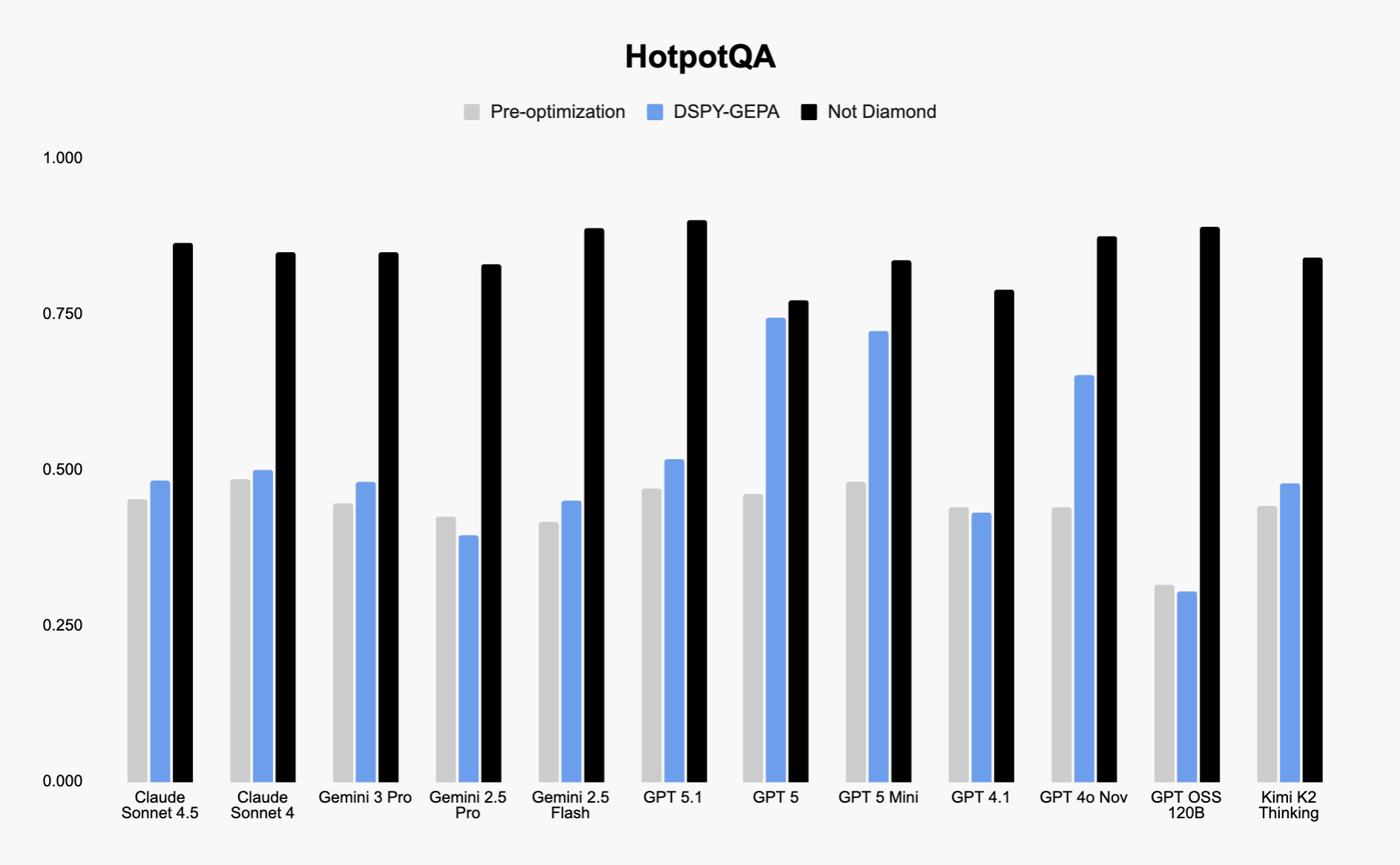

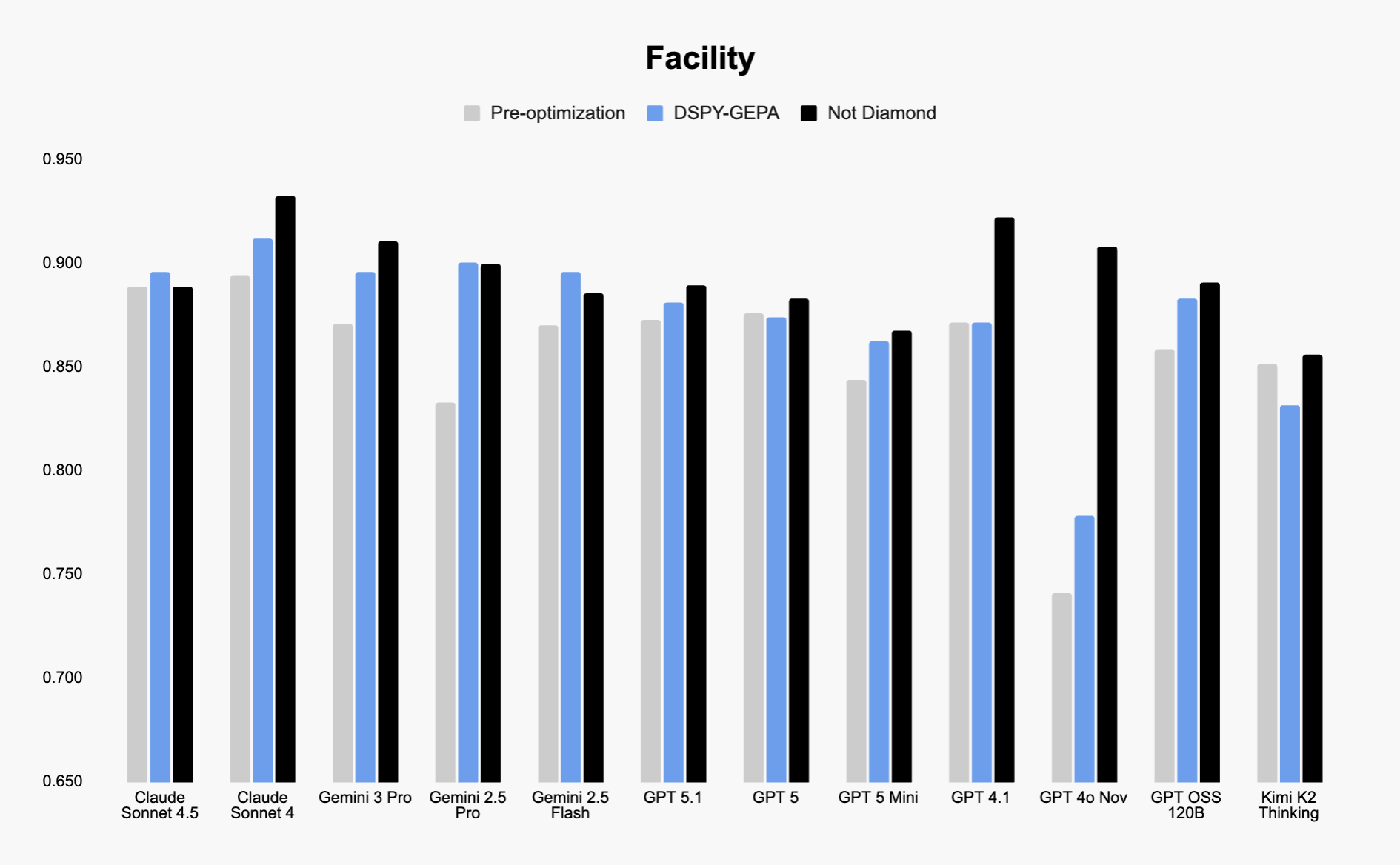

Prompt Optimization achieves state-of-the-art performance across public benchmarks and real-world task categories, including intent classification, retrieval-augmented generation, question answering, and structured outputs (Banking77, Clinc150, HotpotQA, and Facility). Across these benchmarks, our technique improves model accuracy by an average of 28.4% and outperforms DSPy-GEPA, a popular existing prompt optimization technique, in over 85% of evaluated tasks. The gains reflect a more production-ready, easy-to-use, and data-efficient optimization approach, allowing optimized prompts to be deployed directly in production without relying on framework-specific execution assumptions.

From weeks of manual work to minutes of optimization

Traditionally, adapting a single workflow to a new model can require more than 40 hours of manual prompt engineering to achieve equal or improved quality. Prompt Optimization automates that process.

Using evaluation-driven optimization, our system rewrites and tests prompt variants in the background, identifying the best-performing versions for each target model. In practice, optimization typically completes in 5 to 25 minutes of background processing, delivering results that would otherwise take days or weeks of engineering effort.
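To make the idea of evaluation-driven selection concrete, here is a minimal sketch in Python. It is not Not Diamond's algorithm or API: it only illustrates the general pattern of scoring prompt variants against a labeled evaluation set and keeping the best one per target model. The function names, the toy models, the candidate prompts, and the evaluation examples are all hypothetical, and the rewriting step that generates candidates is assumed to have happened already.

```python
from typing import Callable, Dict, List, Tuple

# Illustrative types only: an example is (input text, expected label),
# and a "model" is any callable taking (prompt, input text) -> output text.
Example = Tuple[str, str]
Model = Callable[[str, str], str]


def accuracy(prompt: str, model: Model, eval_set: List[Example]) -> float:
    """Fraction of eval examples answered exactly right under this prompt."""
    if not eval_set:
        raise ValueError("eval_set must not be empty")
    correct = sum(1 for text, expected in eval_set if model(prompt, text) == expected)
    return correct / len(eval_set)


def select_best_prompt(
    candidates: List[str], model: Model, eval_set: List[Example]
) -> Tuple[str, float]:
    """Score every candidate prompt and return (best prompt, its accuracy)."""
    scored = [(accuracy(p, model, eval_set), p) for p in candidates]
    best_score, best_prompt = max(scored, key=lambda pair: pair[0])
    return best_prompt, best_score


def select_per_model(
    candidates: List[str], models: Dict[str, Model], eval_set: List[Example]
) -> Dict[str, Tuple[str, float]]:
    """Pick the best-scoring prompt separately for each target model."""
    return {name: select_best_prompt(candidates, m, eval_set) for name, m in models.items()}


# Hypothetical stand-ins for two models that react differently to wording.
def toy_model_a(prompt: str, text: str) -> str:
    label = "refund" if "money back" in text else "other"
    return label if "one word" in prompt else f"The intent is {label}."


def toy_model_b(prompt: str, text: str) -> str:
    label = "refund" if "money back" in text else "other"
    return label if "lowercase label" in prompt else label.upper()


CANDIDATES = [
    "Classify the intent.",
    "Classify the intent. Answer in one word.",
    "Classify the intent. Reply with only the lowercase label.",
]
EVAL_SET = [("I want my money back", "refund"), ("What are your hours?", "other")]

best = select_per_model(
    CANDIDATES, {"model_a": toy_model_a, "model_b": toy_model_b}, EVAL_SET
)
for name, (prompt, score) in best.items():
    print(f"{name}: {score:.2f} with prompt {prompt!r}")
```

In this toy setup the two models end up with different winning prompts, which is the point made earlier: a prompt that works for one model may not transfer to another, so selection has to run per target model against the same evaluation data.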

SAP releases new prompt optimization features using Not Diamond

Also today, SAP released a new version of its prompt optimization features in AI Foundation, developed in close collaboration with Not Diamond.

This new version delivers significant gains in performance and data efficiency, enabling SAP customers to achieve higher-quality results while adapting more quickly to new models and changing workloads.

SAP customers can experiment with prompt optimization free of charge for 30 days through the generative AI hub trial in SAP’s AI Foundation. Additionally, each customer account receives the first 100 optimizations free. Token consumption and post-promotion costs apply.

What’s next: agent optimization and context curation

Prompts are only the starting point. The next bottleneck in production systems is the behavior of the full workflow: how an agent plans, which tools it calls, what data it retrieves, what it keeps in context, and what it leaves out.

That is why we’re extending our current research into end-to-end agent optimization and context curation: a data-driven layer that continuously improves entire agent workflows. Instead of tuning a single prompt in isolation, we tune the full system across the levers that actually drive outcomes in production.

This is where the long-term value accrues. As teams increasingly leverage networks of diverse models and agents in production, the most durable advantage will come from the optimization layer that learns from real evaluation data, improves with usage, and compounds over time. If you are struggling to improve complex agentic systems, please reach out to us for early access.

Prompt Optimization is generally available

Getting started with Prompt Optimization takes less than five minutes: sign up for an API key at app.notdiamond.ai and use our quickstart example to submit your first optimization request.